Does your life feel like it has been taken over by “Artificial Intelligence”? I have a very remote relationship to it but cannot say that it is not influential (while I was typing “Artificial”, Word already proposed “intelligence” to follow – machines telling you what is right have been around for a long time, after all).

A warning: this blog comes in two parts. As I started writing, I realized I had been thinking about AI a lot, but never really articulated a position outside of my brain or beyond discussions on one of the many new articles or expert assessments about it. Hence, a lot had been piling up inside me, and the post was getting longer and longer. The first part is about AI as we academics may be dealing with it in our own work, as researchers and knowledge producers; the second is about AI and teaching.

Thinking back, AI became relevant for me the first time in late 2022, when ChatGPT was launched; at the time I simply felt exhausted. It seemed to be a threat to all that I, and many like me, do. A bit like: You are standing somewhere, minding your own business, when suddenly a massive flood comes at you. All you can do is try to keep standing there, but you might be swept away and must focus all your energies on not drowning.

What is it that we do and that felt so threatened? Thinking. Creating knowledge. All of a sudden, it seemed like this complicated work could be done much better, and much faster, by a new invention (I try to resist the all-too-common anthropomorphizing of AI here). Why do something that can be done much better and more conveniently FOR you? Sounds like the story of modernization – think of the washing machine, the calculator, the computer – what could possibly be problematic about that? Perhaps that your thinking muscles, if you don’t use them, might regress.

There have been – and continue to be – so many prognoses about how AI will change our lives. Some are sensational, some dystopian, others ooze authority about how to best harness this new tool for your benefit and for the best purposes. In the academic world, it seems that many people pursue the “harness for your benefit/best purposes” strategy.

I recall the first time I was directly affected by this kind of AI use: a colleague who had agreed to write a letter for my promotion file sent a draft of that letter to me and asked for my feedback (Was everything ok? Did I want to add anything?). I had one or two comments but really did not want to further burden the colleague, as I was truly grateful that they had taken the time. From that colleague’s reply, I learned that the letter had been composed by AI. That felt awkward. The letter sounded fine to me, a bit general, but checking all the boxes that a letter of support should check. My gratitude shrank, to be honest. Was I not worth the effort for a REAL person to write a recommendation for me? I get it, of course: writing substantive letters takes time, and we are all chronically time-constrained. Sometime later, I heard colleagues talk about their routine use of AI for the many letters of recommendation they are asked to write for their students. The matter-of-fact way they mentioned this was so surprising to me that I could not even verbally express my surprise. Students and former students: as of this writing, I can attest that ALL of the letters I have ever written for you have been human-composed. By myself. I admit that I use a certain pattern (where do I know you from, what can I say about your academic achievements, which are your particular strengths …), and I cannot guarantee each letter was of high quality; but I took the time.

I kept thinking about this AI-composed letter by my colleague and can say I got used to this new reality. I am not bitter (to quote our favorite Miami comedian Dave Barry)! In retrospect, I should have asked HOW they used it – and could have learned something. Also, I admit that the many letters that I have to READ (like, for applicants to our Graduate Program) are often pretty generic, and sometimes of a quality that suggests AI assistance might have improved them significantly. Still, a piece of advice for all of you trying to get a job or get into a program: I learned from The Professor is In that when a lot depends on it – for example, when writing a cover letter for a job that you ACTUALLY want – AI is not going to get you on the shortlist.

When listening to colleagues – and I typically converse with social science and humanities folks about this – the most common AI use that I hear about is saving time in the complex world of knowledge production. For example, it helps to get an overview of relevant literature in a particular area – you don’t have to read everything from A to Z, and AI may be useful in getting comprehensive coverage or in leading you to literature you were not yet aware of. As an expert in a particular field, you have the ability to assess what AI generates for you, so clearly, this new tool enhances the knowledge production you already know how to engage in. Sidenote: my AI-averse significant other sometimes checks AI tools in the field of his expertise and often gets responses to his questions that leave him unsatisfied (from outright wrong to superficial to not based on pertinent literature). I guess this tells us that training a program on “what is out there on the internet”, as is done with Large Language Models (LLMs), does not mean you get good information, but rather a lot of replicated information. This cannot be terribly surprising to anyone – even journalist Andres Oppenheimer, who tells us that he has been using chatbots for getting his news, now warns us that these are not always right (and in fact, get “wronger” with each upgrade).

Is this thought still allowed today: can we conceive of knowledge as something beyond an internet upload? Is it perhaps something inside a human (or even non-human) brain and body and between people with brains and bodies? Something that is alive, evolving, context-dependent, molded by those who create it, use it, reuse it, apply it?

Like all of you, I get a lot of news about AI. There is the “no alternative” kind. It makes no sense to avoid it (I have done a lot of avoiding, as you can perhaps tell – but let me call that “keeping a sane space for human thinking and interaction”). Rather, “it” will take over. The developments are so rapid, the only thing we can do is try to keep up. Are the bots already leading, and humanity is running after them (if they let us)? I happen to think there is no such thing as “Artificial Intelligence”. What we rather have is “Predatory Pattern Recognition”, programmed by human intelligence. Predatory, because whatever online available knowledge LLMs are trained on was produced by someone (except that we now hear increasingly about AI-generated published research – sigh). In their answers, AI tools come across as friendly, knowledgeable counterparts (anthropomorphization again – making people “feel comfortable” is a big part of the selling point). And how does the program “know”? Could it at least make transparent what data it draws on? I would call this “citing your sources”. In this context, Academia.edu has been in the headlines recently. Many academics use it to make their work more widely known and some, especially those without institutional affiliation, use it as their website. It recently changed its terms of service, pretty much stating that it allows itself to do anything with the data you upload, including using it to train LLMs. Many academics deleted their accounts. Academia.edu then backpedaled, but it is probably time to move to scholar-controlled online networks.

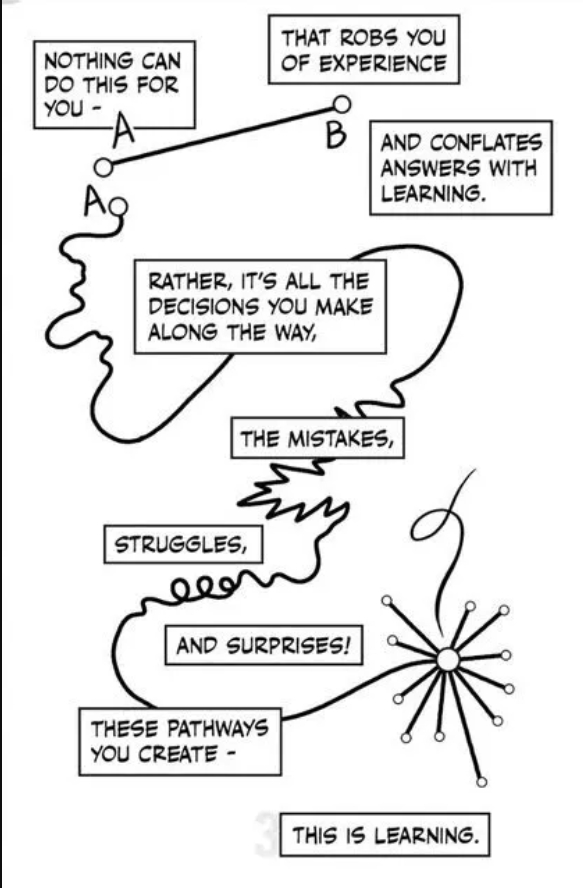

Through a colleague, I found this visual created by Nick Sousanis (very cool guy – check him out, he wrote his dissertation as a comic!). It seems like a good encapsulation of what AI does for us and why it is not a good idea to use it if you are interested in learning to think and keep those thinking muscles in good shape. While time might be saved, having the right answer to a question (outcome) is not the same as learning (process – nonlinear, sometimes painful).

A few final thoughts: First, I have approached the issue from my own experience, and accordingly, inexperience in other fields. These other fields might be more interesting. For example, in “What if there’s no AGI?” Bryan Macmahon takes a look at the financial implications of the AI hype. He talks about the limits of LLMs that their own creators have been aware of for a long time. But since they still wanted to make money, they lured investors to sink huge sums into the “next internet” – a problem that could result in another economic bubble (the burst of which will harm all of us, not just the investors).

Second, if you want to know how bad AI is for the environment, watch this video conversation by Inside Climate News, “Is AI throwing climate change under the bus?” Admittedly, the question is a bit misguided, as the conversation is about measures to STOP climate change being thrown under the bus, but the short answer is: Yes, exclamation mark! The data centers use tons of electricity and water. Longer answer – it depends on how this demand is going to be satisfied, by fossil fuels or renewables (guess what plans the current US administration has on that). So, how about thinking about AI use as if you were replacing your nice hybrid, or even electric car, with the worst gas-guzzler you can imagine? To make it more normative: do you really not care how much this goes in the wrong direction, beyond the comfort of it all?

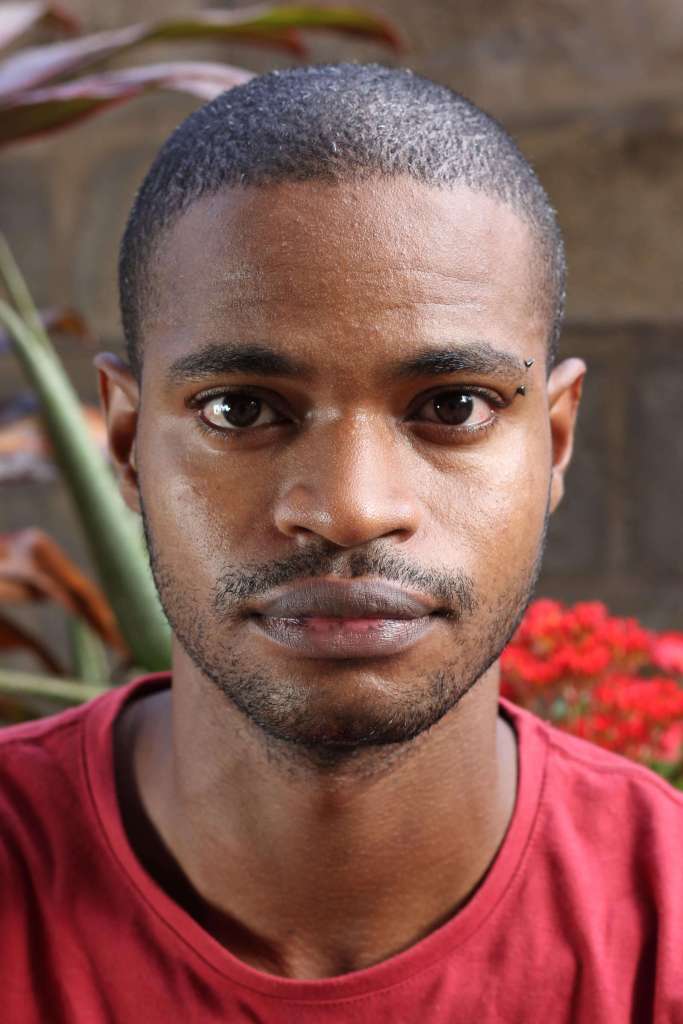

Finally, have I come across anything REALLY good about AI? Yes. When it is used for creativity, as Caribbean visual artist Rodell Warner demonstrates in this post: Brief and Candid Notes on Artificial Archive. He writes about the many limitations of historical photographs taken of Caribbean people, especially people of color. They are depicted as the white elite – who took the photos – saw them: more as labor force and part of the estate equipment than actual humans. Warner uses text-to-image AI to imagine what ordinary 19th-century Caribbean people might have looked like in their own image, as humans who show their personality, express feelings, have fun, are portrayed for their uniqueness and beauty – check out these stunning photos. It is an odd experience because the photos deviate so much from what we expect to be shown – humans de-humanized by enslavement and indentureship. I have no idea how this transformation works technically, but it was the first time that I have found AI interesting, enhancing our human imagination.

Where do YOU stand on AI? More on AI and teaching soon.

Warm greetings from Germany,

and thank you for this article!

Over here, people are already considering which tasks AI can take over for teachers!!! Andrea Weisz
